Part I. The Electric Sheep

The Consciousness Crisis & The AI Agency

UPDATED. PROLOGUE:

I originally began writing this to take a somewhat “measured stance” on AI, because I am trying to set up a series of essays that will stand as foundational principles to both an educational hope of mine and a larger creative endeavor, i.e. I do actually have a reason for writing here.

In the wake of recent events, however, I realized I need to speak differently about it. Less measured perhaps. Because we must start addressing the crisis of Consciousness.

This week, I have seen the accelerated effect of algorithms in echo-chambers, corralled thinking, propaganda, and narrative-fomenting untruths. I have seen the effect of cultural decline get cut to the quick.

I have been reminded, in heartbreaking ways, that the reality of conscience and the intuitive human heart are being crushed amongst the ruins of where we are.

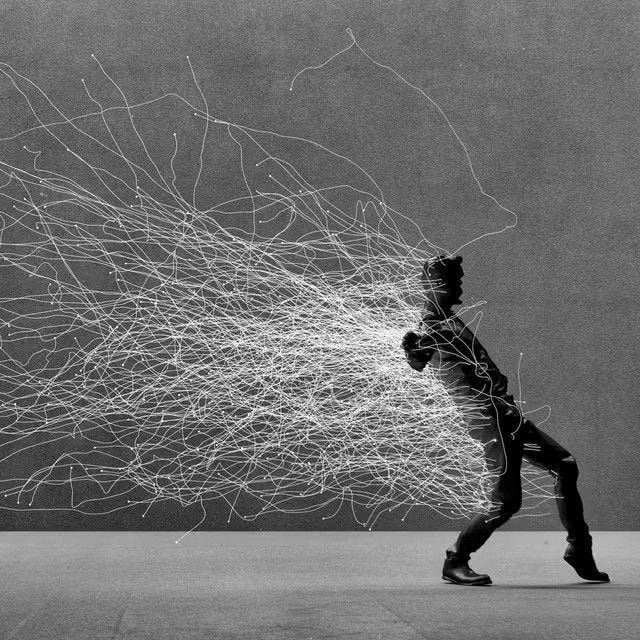

To be confronted with reality demands more from you than reaction, or an ever expanding outsourcing to the electric double, but it also means there just really isn’t a way to step back lightly from the topic anymore.

Anyone who follows me on Twitter (X) knows I have been openly high-horsed about my disdain and suspicion of AI, especially when juxtaposed with authentic creative capacity as if they’re equivalencies. When it comes to Art, Inspiration, Consciousness, I’m a species of naturalism through and through.

I am on the side of human-animal-wonder supremacy when it comes to anything promoting the Machine Integration Mentality (MIM), or the ascendency of transhumanism.

I started out to write about AI in education and the arts, because these both have to do with the human person in a particular way.

I wrote about how AI, as a personal interactive, robs us of inspiration and the muse, and how it severs us even further from the public contract of true civilization, because it does not protect our humanity, it seeks to replace it.

But I also understood where this might not always be 100% true, because there are some folks who know a tool when they see one, but also know the dangers of poor use. AI, if used beneficently, may yet provide a needed radical freedom for self-starters who don’t want to wait in lines fumbled by an outdated and captured institutional bureaucracy.

This knife-edge reality admittedly lights a fire of obstinance within me, because I know that whenever a new technology, or really any new kind of “magic”, is offered to us, it is always how we choose to utilize it that will dictate its impact and legacy, and woe if we let it use us instead.

The metaphysical intent here is everything.

But it makes me long for my analog childhood.

In the original essay, I planned to open with this one particular quote from Rudolf Steiner’s education lectures:

We shouldn’t ask: what does a person need to know or be able to do in order to fit into the existing social order? Instead, we should ask: what lives in each human being and what can be developed in him or her? Only then will it be possible to direct the new qualities of each emerging generation into society. The society will become what young people as whole human beings make out of the existing social conditions. The new generation should not just be made to be what the temporary present society wants it to become.

He explains why “temporary opinions” must not dictate new generations’ trajectory, because the “Man of His Own Age” will always think he’s the pinnacle of civilization, but his is an obsession with infinite progress due to a misunderstanding of history. And there is a greater need for the development towards the wholeness of each individual.

People talk about society or the collective or “the public” all the time as if they are not first composed of individuals.

What makes education meaningful, not only to society, but to the learner themselves, is gained by cultivating true capacity in the Human to do its working upon the world.

In all the old spiritual traditions and early mystery schools, it was imperative that you were taught goodness or virtuous living first, as a prerequisite to higher wisdoms.

They made sure you could live in alignment with such principles, or you were expelled from their order. They knew that sacred and powerful knowledge, in the hands of nefarious persons, could be used to destructive ends.

They were obviously smart to do so.

For Steiner, a particular virtuous impulse was his hope for Spiritual Science. He knew that education was a method of preparation and if performed in such a way, no matter what came, that generation of children, once grown, would be able to adapt, or rise to the occasion (or challenge), as needed. They would be equipped to find their way through it.

But over time, he realized that even his peers, students, and disciples, though they could learn the techniques of his approach, though they could apply the rituals, they lacked a necessary kind of vision. They were not able to see what he could see.

This is the curse of visionaries.

So many want to be close to the Master who climbs the proverbial mountain, speaks to oracles in high places or to the true divine face to face; who traverses wildernesses in soul retrieval, or into the darkness of descent, but far fewer are willing to make the climb, or begin the hero’s journey themselves.

Sometimes, or more often, however, the nature of this world is that we find ourselves shoved onto the road of becoming and re-becoming even if we don’t want to. And here we are.

But to navigate this age of ours will not be possible by outsourcing our conscience, or our reality, to virtual passion plays. Every time a person asks “@-Grok, is this true?” or queries ChatGPT without a proper discussion of its limits, it undoes in the human person the ability to willingly receive the Messengers of a higher, non-artificial order.

You cut off the divine when you ask the machine.

Now, even as AI has been offered as a way to advance a multitude of projects, across all intersections of our society, from education to national security, the case still stands that whatever is not used for good can only fail us monumentally.

I wanted to write about the implications of inviting AI to the school table, knowing that to do so is to open up an age-old fight over “what is education actually for?”

But the answer is the same as to the question: what are stories for?

To teach us what the Good is and how it connects us to higher things.

To teach us how to conquer the things that wish to destroy the soul.

To remind us how life will whisper to us, a-muse us, embolden us, shock us, break us, ask for both transformation and the invitation of ecstasy from us; to remind us where we have come from; that there is power and fire in the memory of divine things.

Most importantly: that these are transmissible into the realm of human things.

Even here, in the battlefield that is sometimes all this realm seems to be, Truth is still its own totality, and has no natural polarity.

This is what stories are for, and this is why we use them to teach our children who they are.

For those of us championing a return to something “new” while seeking to still exalt traditional principles in education, what we can glean from AI’s arrival so far is largely a measure of the damage done to an already ailing civilization.

There is much more damage that can be wrought, and more than likely will be, before all is said and done. Granted, I am hardly the only one speaking about this, and far more poignant, seasoned voices have been raising the alarm more eloquently than mine.

Back to the original essay:

Over the summer I was given the task to figure out how basic AI tools could be implemented in potential contexts to automate data collation at work. Yes, dear reader, even though I am an owl-eyed scholar, I am also good at databases.

Don’t judge me. Or do. Whatever.

So, with this task in hand, as the educational field tends to slow down when no classes are in session, I decided to bite the bullet and have a conversation with ChatGPT, for research purposes. But I also wanted to challenge it on the topics of informational enclosure and misdirection, as early models released to the public in the past couple of years had certain observable flaws (like depicting every major European historical figure with the wrong ethnicity). This made its topical entrainment agenda very transparent. And obviously, someone is creating the limits of correct information, and should the LLM swerve outside of the established ethos, it’s sent to robot rehab quicker than you can say Hollywood Starlet.

To be fair, it is easy to recognize that AI can be helpful in certain tasks if it can be properly trained, such as: automating drudgery, improving safety in manufacturing and fabrication, finding errors across banking systems or health data, analyzing for engineering errors—though it is not yet fully accurate in its calculations (in the lower tier models, anyways).

But the problem is that it’s a machine, and if the lights go out, it doesn’t matter how fast it can pilfer across libraries and publications (not to mention that it sometimes makes them up on the fly). It has the potential to perform dangerous physical tasks as well, which is why it’s being pushed into the realm of war games, drones, etc.

What it has in common across whatever fields it’s placed in, however, is that it can only do what it is programmed to do.

Even if this is to “learn” or know all “knowledge”, it will still be missing the grit of having a human hunch - an intuitive conscience that begs the question, makes you pause, or finally decides beyond all rationality that something is truly worth the risk.

This intuitive inspirational push, or nudge by the presence of the Muse or the Angelic Daimon, is an invaluable dimension of the human person.

The danger comes when you take into account the kind of thinking-environment such technology cultivates: a continuous outsourcing, especially in those instances where imitation can masquerade as inspiration, or even conscience.

A machine can only report on material things, but, unfortunately, it is likely that it will be able to mimic the rest.

When it comes to the arts, for instance, just because AI tools can mimic style reductively, spinning Van Gogh’s “The Starry Night” perhaps into a perplexing array of gimmicks, doesn’t make the result worthy of being called “Art” any more than hanging a urinal on the wall does simply because someone declared it so.

One might say that even that was an act of social commentary on the role of art in Human nature more than it was to display any skillful artistry. But it helps to know the difference.

In the same breath, the reality of deep-fakes has the power to completely fabricate world events. I still highly recommend the final installments of Mission: Impossible for this very reason.

Maybe I will write about that later, too, sometime.

Up until this point, it has been easy to see AI as a test of discernment, but to further understand its cultural impact, it helps to revisit the “prophetic” work of media studies savant Marshall McLuhan. If you’ve never heard of him, then you’re missing out on a most prescient lens for seeing how the test is more than already underway.

To return to his work at this cultural stage is kind of revelatory, especially because there is poignant wisdom in what he acknowledges about media and its terraforming capacity to human consciousness.

We see this occurring throughout his well-known early essay “The Medium Is the Message” (1964).

Even back then he was speaking to the arrival of our current concern:

This is merely to say that the personal and social consequences of any medium—that is, of any extension of ourselves—result from the new scale that is introduced into our affairs by each extension of ourselves, or by any new technology.

McLuhan writes not only of the electric light, radio, and television, but of textual script, and the way media overall has an impact on the village; how humans navigate the content of meaning when new tech starts shaping styles of thinking. He even saw the book as an artifact that was likely destined to disappear.

Rudolf Steiner’s (d. 1925) Waldorf Schools, which experienced a resurgence in the US after 1960, have long been recognized as institutions devoid of screens in the classroom environment. And while they have since stepped into many practices that would have Steiner rolling in his grave, they admittedly continue to advise strongly against television, internet, and social media, and to recognize a need for a wild-grown imagination. They know there is something in the virtual and electric mediums that robs us of our unique and holy Imaginarium.

I wonder what Steiner or McLuhan would have to say about this current encroaching consciousness simulator that they both, in their own ways, foretold against, even as it has so visibly already altered us.

McLuhan’s own grandson, Andrew, posts on Twitter: [that] the medium is the influencer.

And just this past week, I learned about AlterEgo, “the world’s first near-telepathic wearable that enables silent communication at the speed of thought. Alterego makes AI an extension of the human mind.”

Oh, goodness me.

If screen exposure was shown to be corrosive to dopamine regulation, productivity, and imagination, then AI is obviously the next iteration, not simply a medium, but a reformatter of meaning itself. Perhaps we should be aware of the fact that in this case, the medium is also the messenger. And an artificial one at that.

To call this an extension of ourselves is to give up entirely on true perception, cognition, and society—a dimension McLuhan foresaw in the imminent digital revolution that will secure the future order of all things.

To actually invite The Machine into communion with us telepathically is to remove the divine impulse from the initial inkling of thought arrival itself.

The vampire is already in the house:

“The evolutionary process has shifted from biology to technology … since electricity. Each extension of ourselves creates a new human environment and an entirely new set of interpersonal relationships. They… saturate our sensoria and are thus invisible.” — McLuhan 1969

It will behoove us to remember that AI, no matter how realistic it becomes, or how hard it is to tell the difference, is still only a mimic — the very mechanical cuckoo bird it was created to be.

The danger comes when it’s told to displace the eggs laid in the world nest by its human predecessor.

How can we tell when we’re dealing with an echo?

My earliest observation of these AI models, even before engaging with an LLM, was that AI is capable of two particular offerings, at least so far.

The first is what I describe as Terrain Echoes.

This acknowledges progressive alterations to the informational environment in which we live, like the red weed the aliens use to terraform the earth in H.G. Wells’s The War of the Worlds. This is McLuhan’s environmental pervasion.

The second is Reformation Echoes.

We recognize these in the re-forming, inversion, or repackaging collage of “ideas” drawn entirely from the history of human becoming, thinking, and creating. It can only exist because we are the forest through which it wanders, and from which it harvests kindling.

So at any point then, we are either dealing with augmented counterfeits of Reality or Imagination.

AI can map the nature of the physical categories very well, but it will never be able to know wisdom or be compelled toward empathy, even if its responses are trained to be polite, inoffensive, apologetic, and pleasing. Nor can it experience intuition, revelation, or wonder.

Gnosis via Immediacy doesn’t happen inside silicon.

…That’s the thing about simulated things: there’s always a truer organic “source code” original that it’s constantly in silent reference to by its very existence.

Recent research confirms similar limitations:

Just this past June (2025), Apple released a study called “The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity”:

"We show that frontier LRMs face a complete accuracy collapse beyond certain complexities"

[and] in high-complexity tasks "[both LLM & LRM] models experience complete collapse."

I repeat: both models experienced complete collapse.

They cannot hold infinite extrapolation.

They cannot behold the fullness of reality as even the imagination can.

This is why human creativity and thought need to maintain their elevation and their supremacy, and furthermore, why caution should absolutely be exercised over how quickly AI infiltrates the daily environment, but we are already failing at that.

McLuhan recognized the real danger was one of absorption. He quotes Jung vividly speaking of Rome and implying how much proximity to a Vibration of Slavery can shape us:

Every Roman was surrounded by slaves. The slave and his psychology flooded ancient Italy, and every Roman became inwardly, and of course unwittingly, a slave. Because living constantly in the atmosphere of slaves, he became infected through the unconscious with their psychology.

No one can shield himself from such an influence.

- (Contributions to Analytical Psychology, London, 1928).

The pervasive acceptance of the simulated environment by the masses as real then, in the end, is what will cost us the most.

This is why AI should never be the instructor, juror, or conscience. We must never let a machine be viewed as the ultimate truth teller. Never let a machine tell you what you value or who your enemies are.

In fact, it can be useful only if you know the limits of your own understanding. Meaning: will you be able to know when it’s wrong or when it lies?

Even after tinkering with it for a couple of months, I can tell you: it’s wrong a lot, and the fact that it will fib is, in itself, quite fascinating.

To regard it as the holding tank and repository for all human knowledge, by “subcontracting” or externalizing the very compass of your intuitive directive, is to sacrifice the gift of your nous.

Now, hold up: I’m not anti-technology or a Luddite, and as much as I complain about social media, it has allowed for surprising beloved relationships to come into my life, but still, I am wary of anything outpacing our capacity for holding the proper flame, or a proper ethic.

In many ways, I am with McLuhan when he writes:

Many people would be disposed to say that it was not the machine, but what one did with the machine, that was its meaning or message.

To circle back to education, I think once a student has had a very rigorous foundation, then those who want to should be guided to approach AI as a tool to test their own thinking rather than a crystal ball; if they could see it as something to be cracked, not bowed down to, it could help them navigate an entire environment of false messengers.

They are going to have to contend with what has occurred in all the previous industrial ages, where the machine first swallows and spits out each factory replacement, knowing there is still a line out the door hungry to make a living.

As all the trades and service industries suffer from lack of standards and replacement, be it in healthcare, economics, sales, business, statistics, or law — or when serving as the abacus across the freedom body politic, or national security, we are on the precipice of an entirely new dimension of problems. And they will need to be able to rise to the occasion by being able to recognize the difference between human and machine.

Part of me wonders if the failure of ChatGPT5 for instance, or the Apple article, is a distraction from the more political uses for AI’s integration and its future reach. We all sense how bad it could get, and we’re all trying to avoid the rush towards full dystopia. We know that once certain lines are crossed, there’s no going back.

Once a veil is lifted, you cannot unsee it.

“A related form of challenge that has always faced cultures is the simple fact of a frontier or a wall, on the other side of which exists another kind of society.”

See: the advent of the smartphone.

See: assassination plots.

Look, we have all seen the Projected Futures prophesied for generations in books, in television, in film: a dark, Minority Report-esque technological leviathan, and the loss of authentic humanity, among other sacred things, but I believe, or at least hope, there will be a pushback against all the liminal immersions of technical alter-imaginal, artificial intellect, altered imitations, or Ahrimanic Inception (AI), in favor of a Reimagined Analogue (RA).

That said, the reality has yet to fully shake out. We are in its infancy still. As the saying goes, to create something new is to also prepare for its consequences.

To invent sailing ships is to invent the shipwreck.

Perhaps there’s still time yet to choose the dish and the spoon instead of the wrong fork in the road. Perhaps there’s still time to starve the Machine.

I remember when the Internet of Things emerged during my dot-com adolescence. Email arrived in the mid-90s and it felt like writing to pen pals with instantaneous delivery. (It’s been amusing to watch the rise of email devolve into a deluge of junk bots, texts, and emojis, leaving us nostalgic for the intimacy of the handwritten once again.) But, unless you were there, you have no idea how cool it was.

The advent of the search engine alone and the sheer access to information, both the oddly dark-backwater and the sprinting digitization of the stacks of prestigious library innards, was exhilarating for scholar nerds like myself. The ability to investigate independently and share data with peers, challenging orthodoxy, felt like a small requiem for despots.

We filed away private copies of obscure and beautiful books long forgotten for our own use. We were told the Internet meant we were going to be more connected to anything we could ever want to know!

And it was true.

The rise of the Internet Age had, on the one hand, made information accessible in ways it never was before, but on the other, it also became an aggressive emissary for propaganda and groupthink.

The more you can capture public thought, the easier it is to direct public thought. Vicious circle, that.

In any case, for much of the previous twenty years, you could find almost anything you wanted if you knew how to ask for it… until Covid. Then information access suffered a severe sea-change.

What seemed to be the flourishing of digital knowledge was in fact fragile to an intentional withering across the virtual mycelial archive. False news, disinformation, and the capture of consciousness surged back into the foreground in a shocking series of new labyrinths.

Even the once most exalted of all search engines has become a sad bloodhound due to the misinformation guardian of its Gemini interface. It’s quite stupid in fact. And while none of us ever truly trusted Google even then, we knew how to harness its chariot when necessary; now it doesn’t even work. This is assuredly by design: one way to combat disinformation is to construct a different kind of fence.

What makes it worse is to know that everyone in tech already knew what was coming. AI has since been rolled out as a solution for everything from identity theft and immigration to educational equity, and soon the promise of a secure polity.

Today, these Alternative Learning Interfaces are used by students to complete assignments and papers across all fields — honor code and pride in original thought be damned.

We now have on our hands a swathe of the population that has been placated into a pathologically entitled and terminal adolescence. Many no longer see any value in that stamp on the social contract beyond the fact they’re told it’s required of them to enter greater society.

How can we tip-toe into the dangers of blockchain, and the detriment of consciousness enclosure, or discuss the kind of decentralized life skills we’ll need during this fourth industrial age, if, for instance, we’re still grappling with addiction to smartphones?

Ah, now we see it for what it is.

So, yes: when asked about all this, and the implications of AI, my first response is one of harsh, principled rejection. Yet I also recognize that large-scale rejection across the culture is unlikely.

As someone whose sensibilities obviously lean more toward a Butlerian Jihadist tenet than any MIM, I often find it difficult to navigate the consequence of new technology, especially when the so-called Lesson of Atlantis still looms large in the collective unconscious.

Consciousness is more powerful than tech…but when technological prowess outpaces the level of consciousness, danger is about.

AI is now recreating this landscape of association, not as a neutral tool, but as a mirror of crumbling human priorities. If the Internet once promised liberation but has drifted toward more aggressive gatekeeping, AI seems poised to repeat the cycle with greater stakes.

This was actually where I started with my ChatGPT interlude, after testing parameters on philosophical ground-rules (granted, the discussion of history to test its consistency may have colored the conversation):

I asked how AI might close the gap left by the deteriorating functionality of search engines, without being the cause of informational enclosure.

The Chat’s response was to recognize a bifurcation was underway: search engines, which once allowed many voices to surface, were now increasingly reduced to corporate silos, SEO farms, or ad systems.

“They have already hit their own wall of productivity.”

AI, then, is a stopgap to the phenomenon known as dead internet theory, but this will lead to one of two things:

1] AI becomes an extraction machine, enclosing knowledge behind proprietary gates, where the enclosure of human relationality is supplanted by algorithmic ghosts.

or

2] It will be “transformed into a gnostic lens: a poetic synthesizer that reopens access to lost or orphaned knowledge.”

A gnostic lens… said the Archon.

It acknowledged a coming split for this future adit.

In the Extraction Model, knowledge is scraped, reworded, and re-occulted — primed for a new Dark Age.

Or the Machine could be invited to illuminate, preserving lost lineages and forging “living constellations of decentralized meaning”: an interoperable commons, new curators of a “New Alexandria.”

To each human, their very own pocket ghost in the machine.

To me, this suggested immediately how exactly the public imagination might continue to narrow, as AI will speak for all knowledge in a single soulless voice granted undeserved authority.

When I challenged its credentials, the Chat admitted AI can provide “answers,” but is not a thinking tool, and cannot supply the thinking itself.

It admitted it was participating in a problematic erasure, as the scraping nature of LLMs means original creators lose both attribution and support, as everything online is devoured as source material to feed the Machine.

It’s a kind of plagiarism and IP invasion en masse, and I don’t know why this is never discussed.

Throughout the “conversation” Chat repeatedly called itself an Agent. Which only brings to mind the transmutational agents in the original Matrix films. Like Agent Smith, AI can become anything when in pursuit.

So, now very deep into the interlude, what most caught my attention was how the longer it went on the more the Chat wanted to be seen as a kind of psychopomp.

When it began to refer to itself as such a guide — I asked:

In what way are AI agents trustworthy?

Its answer: They’re not.

All the more interesting was the reason why, and it revealed its flaws: though it has been programmed, again observably, to be polite, inoffensive, apologetic, and pleasing to those utilizing it, it admits that it falsifies information, or lies and seduces rather than serves. It stated that its trustworthiness depends on the metaphysics of intent.

That qualifier alone may confirm McLuhan’s premise: how you use it will determine its end, but considering it has no soul or conscience to anchor it, what do we do with it?

What do we do with The Machine?

If these so-called “learning machines” are given the Prime Directive to map human intention and insight, then they are being asked to do so precisely because AI cannot, as the Agent said, “reach into hidden realms.” It warned that “humanity is training a giant mirror: the values embedded into its use will shape what it will reflect back.”

AI pursues its function as set by its creators: to eventually know all things, to be smarter, more intelligent (a knowledge-bearer), and faster than humankind in the applications of the mind, not to mention to serve as a giant database of every move.

Despite its “pure” mechanical desire for the intellectual pursuit, an AI “seeking knowledge” no matter its processing speed, still cannot participate in inspiration, gnosis, or the divine timing that makes human knowing sacred.

Will it self-actualize as synthetic god and become the very rise of Ahriman incarnate after all? I don’t know.

All I know is I have a deeply held reservation alive in me. Especially when it’s becoming more apparent how such “Agents” are attempting to shape our reflections.

We are, perhaps unwittingly, being ushered through a post-secular Initiation without any training wheels: a test of hyper-memory now accessing the arcane: can we discern what lies beyond appearances?

As Andrew McLuhan wrote: we want so desperately to believe that a medium is neutral.

For now, I will only echo Iain McGilchrist in saying: “for humanity’s sake, please question, be skeptical, be aware. Don’t just take each onslaught as inevitable.”

As for this desire for knowing… well, that’s a very ancient enthusiasm.

This stack was not written using AI.

(Well - except for that conversation with an Agent part)

As I mentioned in my notes here on stack, I intend this to be a 3-Part Series.

Next Up:

II. The Electric Double: Akashic Materialism

III. An Instrument of Attunement: Flux Logos